Designing with context

Once, the internet was a parallel universe, quite separate to our own. Those who wished to cross the dimensional gap needed a wormhole. We called that wormhole a PC. The (double) click of a button sent you into the virtual world, and when you were done you returned, stood up, and carried on with your day. As with most sci-fi, this precious technology was first the plaything of the rich: techies, scientists, white collar Westerners.

Today, pervasive connectivity and cheap components have turned technology inside out. The internet is now part of our world. Millions of devices – PCs to smartphones, consoles to wristwatches – act as a canvas, bringing it into the lives of a truly diverse audience across the globe. The divide between the physical and digital worlds has broken down, meaning new contexts of use have come to the fore.

Context is a slippery topic that evades attempts to define it too tightly. Some definitions cover just the immediate surroundings of an interaction. But in the interwoven space-time of the web, context is no longer just about the here and now. Instead, context refers to the physical, digital, and social structures that surround the point of use.

This definition is expansive, but we need only look at daily life to see that context has profound effects. Mechanically, a football match is the same wherever it’s played; yet context skews the odds: a team playing at home receives a proven advantage from their favourable environment, fan support, and potentially even elevated hormonal response.

The digital community has yet to fully understand the facets of the multicontext era. As a result, two stereotypes pervade: the desktop context and the mobile context.

Context stereotypes

The desktop context stereotype is familiar to any web designer, where it’s been the contextual default for years. In this stereotype, the user sits comfortably in front of a ‘proper computer’. The room is well lit, the pencils sharp. Although ringing phones or screaming kids will intrude at times, we think of this context as ideal for tasks that demand deep or prolonged concentration: filling in forms, say, or detailed research.

In the mobile context stereotype, the user is in an unfamiliar setting. Public transport invariably features: “man running after a bus”, and so on. Easily distracted by his environment, our user pulls out his smartphone in sporadic bursts, in search of local information or succinct answers to specific questions. We believe therefore that the mobile context is perfect for location services, wayfinding, or any product that helps users get near-instant value.

Both stereotypes contain morsels of truth. Certainly it’s easier to concentrate while comfortable and static, and smartphone users do play with their handsets during downtime. But the stereotypes are fundamentally lacking.

First, the desktop context is hardly a uniform concept. Not only do desktop computers offer a huge variety of screen sizes, operating systems, and browsers, but the environments we lump together under the desktop stereotype are also diverse. An illustrator’s home office may boast two large screens and a graphics tablet, while a parent may grab a rare moment of laptop downtime on the sofa.

Both users are static, free to concentrate, and easily pigeonholed into the desktop stereotype. Yet they will interact with technology in notably different ways.

The diversity continues to grow. In many countries the web revolution has bypassed the traditional PC, with many citizens first experiencing the web on a phone. The different hardware and infrastructure landscape makes the desktop stereotype unrecognisable to millions.

The mobile context stereotype is equally troubling. The devices commonly labelled ‘mobile’ are already too heterogeneous to form a consistent category. People use smartphones, laptops, netbooks, and tablets in lots of scenarios, both static and on the move. Pop a Bluetooth keyboard on a train table and you can fashion a desktop-like setup for your smartphone, even as you hurtle through the countryside. NTT DoCoMo found that 60% of all smartphone data was used indoors, often while the user was watching TV or surfing on a more traditional computer.

(Data from “Mobile as 7th of the Mass Media”, Ahonen T. futuretext, 2008)

The connected world offers a host of potential contexts for the things we make. Different people use our products in different places at different times.

Professor Paul Dourish argues that our very understanding of context is flawed. Rather than it being a stable, knowable state in which an activity takes place, Dourish contends that context is emergent: that is, the activity itself generates and sustains context (“What we talk about when we talk about context”, Personal and Ubiquitous Computing, 8(1), 19-30). In this model, context is volatile and based on implicit consensus. A risqué conversation at work may suddenly become unsuitable for that context when a manager walks in, or when someone proffers a controversial opinion. A smartphone user may realise a registration form is longer than they want to complete on their awkward keyboard. As the nature of the interaction reveals itself, the user decides it’s too laborious for that context, and gives up.

Stereotypes can’t do justice to the diversity that surrounds today’s technology. To make excellent products that truly understand our users’ contexts, we must look further, and investigate context first-hand. There are two routes. The first is to gather data using the device in question.

Data-implied context

Our devices can speak to us like never before. So surely we should listen? By gathering fragments of sensory data – location, motion, temperature, etc – we can hunt for patterns, and piece together the context of use accordingly. It’s an appealing concept. The data is easy enough to capture (although browsers have poorer access than native apps), and in the quest for ever more impressive technology, the wow factor is hard to resist.

However, the data-implied approach to context can be dangerous. Data cannot assure us of intent. I may be at a cinema to watch a film, but I may also be conducting a health inspection. Confusing correlation (“people at train stations often want timetable information”) and causality (“someone’s at a train station – they must want timetable information”) is a classic logical error, and one that can harm your product. The rabbit hole deepens, with the risk of error multiplying with each fresh assumption. Even if you’re 90% sure of each assumption you make, the likelihood of getting five independent assumptions right in a row is about 59%, not much better than a coin toss.
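The arithmetic behind that warning is easy to check. The sketch below assumes every assumption is independent and equally likely to be right, which real assumptions rarely are; the function name is illustrative only.

```javascript
// Chance that every one of `count` independent assumptions holds,
// given that each is correct with probability `confidence` (0 to 1).
function chanceAllCorrect(confidence, count) {
  return Math.pow(confidence, count);
}

console.log(chanceAllCorrect(0.9, 5).toFixed(2)); // prints 0.59
console.log(chanceAllCorrect(0.9, 7).toFixed(2)); // prints 0.48
```

By the seventh stacked assumption, the odds of being right throughout have dipped below even.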

Besides the inherent inaccuracies, data-implied context has other risks. Products that lack transparency about the data they’re using can become privacy pariahs, scaring and infuriating their users (“How did they know? How do I opt out?”).

The approach also views context as static, independent from the activity. As such, it ignores how behaviour changes with time, as people adapt to – and are affected by – their technologies and environments.

These concerns don’t mean that sensory data is useless. Far from it. The risk lies in the flawed assumptions that can result when sensory data is your sole source of insight. If those assumptions – and their impacts – are trivial, assuming context from sensory data is relatively harmless. However, context is ultimately too subtle and human to be left to machines alone. Great design is built around people – not devices or software – so in addition to learning from data, take time to research contexts of use first-hand.

Researching context

Research trades assumptions for knowledge, boosting confidence in your decisions. Done right, it can also encourage empathy within your team, and lead you down routes you may have never otherwise considered.

There are many research methods to draw upon, but no prescription for choosing the right approach.

It’s always tempting to start with numerical tools like analytics and surveys. Both offer the comfort of volume, but context is a largely qualitative art. Contextual details are slippery, often falling through the gaps between numbers. While quantitative methods are a decent starting point, they’re best when paired with qualitative studies.

Interviews will help you understand users’ motivations, priorities, and mental models. Write a script to cover your main context questions (see DETAILS, below), but deviate from it as interesting points arise. Face-to-face interviews are ideal, since you can build rapport with your participant and learn from their body language, but phone or even IM interviews can work just as well.

The main limitation of interviews – their self-reporting nature – is exacerbated when researching context. Participants may not accurately depict their contexts, or may omit relevant points.

Contextual enquiries allow a closer look. Here, a researcher shadows the participant as a neutral observer, occasionally asking questions to clarify understanding and prompt elaboration. A less formal variant, ideal for products that address everyday needs, is simply to spend some time among the general public. Lurk at the supermarket and spy on shoppers. Take a seat at a popular landmark and watch tourists fumble with maps and street names. You may be surprised at the unexpected ways people manipulate the world around them, and the ingenious hacks they use to get things done.

(Photo by W.D. Vanlue.)

Real-world research is often the only way to learn about how people interact with their environments, and the impromptu solutions they employ.

You can bring a similar mentality to digital fieldwork. Trawl social media to learn what people say and think about particular activities, and spend time in specialist forums to help you appreciate the environments and backgrounds of your users. Immerse yourself in the community and your intended users’ language, mindset, and contexts will inevitably rub off.

Diary studies are ideal for longitudinal research into users’ interactions with a particular product, activity, or brand over time. This makes them particularly useful when you need to understand the contexts that support complex, prolonged decisions. The name comes from the practice of giving participants a diary, to be filled with accounts of the day’s relevant events – but you needn’t be so literal. Want to learn how people choose a car? Try asking users to photograph and comment on car adverts they see, create a scrapbook, or answer a brief daily questionnaire about their latest thoughts.

DETAILS – the seven flavours of context

Research will give you insight into more than just context. You’ll find it valuable in drafting product strategy, choosing which devices to support, planning your communications, and more. But let’s take a closer look at the different types of contextual information you might spot, and what your findings may mean for your design.

Of course, any attempt to classify context is approximate, since the categories inevitably melt together. However, the seven ‘flavours’ of context below cover all the important facets, meaning you can be confident you’ve accounted for context’s primary effects.

Device context

A device’s form and capabilities will shape a user’s approach. Some device features – input methods, screens and other outputs, network connectivity – have weighty implications that deserve their own separate consideration. But how does the user’s choice of device affect the context of use?

In the digital age, ‘form follows function’ no longer holds. A smartphone has enough processing power to launch a rocket, while a supercomputer might just be playing chess. However, there is still some correlation between a device’s form and its likely capabilities. A screen, for example, is likely to dominate the physical form of a device, and hence the tasks it suits.

In 1991, ubiquitous computing pioneer Mark Weiser suggested three future forms for digital devices: tabs, pads, and boards (“The Computer for the Twenty-First Century”, Scientific American, Vol. 265, No. 3. (1991), pp. 94-104). Today’s devices map to this taxonomy in obvious ways. Smartphones and MP3 players are tabs: small, portable devices that originally offered limited functionality, but have expanded in scope with time. Laptops and tablets fit with Weiser’s vision of the pad, and large desktop computers or TVs represent the realisation of the board.

(Photo by adactio.)

Dan Saffer recently proposed extensions to Weiser’s list of device form factors, adding dots (tiny, near-invisible devices), boxes (non-portable devices like toasters and stereos), chests (large, heavy devices like dishwashers), and vehicles. (Designing Devices, Saffer D, 2011.)

Today, only some of these forms are relevant. The internet fridge remains an absurd symbol of the Just Add Internet hubris that plagued the early dotcom years, and we certainly couldn’t run today’s web browsers on a dot. But given technology’s heady velocity, any of these device forms could provide web or app access in the future. A tiny device will always be limited by battery life and a small screen, but it’s easy to imagine using it to check in to a location-based app, or as a portable screenreader speaking the daily headlines. Even today, new cars ship with built-in WiFi hotspots and passenger screens. Within the next few years, the internet – and the things we build for it – will materialise in all sorts of unexpected places. Some devices will of course be better suited to particular tasks. Some forms will be key, others peripheral. But all will be relevant to digital design.

A device’s operating system provides its own software context. Consider what applications and capabilities the OS allows, and look for ways to integrate these into your product. For example, if you know people will use your website on devices that can make phone calls, use the tel: protocol in your markup, allowing users to initiate calls from a simple link.

<p>Contact us on <a href="tel:+320123456789">(+32) 0123 456-789</a></p>

Consider also what happens if a user’s OS lacks capabilities you’re relying on. Have you made provision for phone and tablet OSs that lack full file-handling capabilities? Are your videos available in formats that don’t rely on browser plugins?

OS functions can also be intrusive. For example, incoming phone calls, system updates, and push notifications may take the user away from your app. Look for ways to help her recover her train of thought when she returns, and consider the risk of data loss. Logout processes that run after a fixed idle time are particularly painful after a long phone call.
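One way to soften these interruptions is to save work in progress as the user goes, so she can pick up where she left off. This is a minimal sketch, not a complete solution. It assumes a storage object with the same getItem, setItem, and removeItem methods as the browser’s localStorage, and the key and function names are hypothetical.

```javascript
const DRAFT_KEY = "form-draft"; // hypothetical key name

// Save the current form values whenever they change.
function saveDraft(storage, values) {
  storage.setItem(DRAFT_KEY, JSON.stringify(values));
}

// Restore saved values when the user returns; null if nothing usable exists.
function loadDraft(storage) {
  const raw = storage.getItem(DRAFT_KEY);
  if (raw === null) return null;
  try {
    return JSON.parse(raw);
  } catch (err) {
    return null; // ignore a corrupted draft rather than break the form
  }
}

// Clear the draft once the task is complete.
function clearDraft(storage) {
  storage.removeItem(DRAFT_KEY);
}
```

In a browser you would pass window.localStorage as the storage argument. Think carefully before storing sensitive fields this way on shared devices.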

Every operating system also comes loaded with UI conventions. Absorb the relevant interface guidelines for the major OSs and seek to align native apps with the device’s established patterns.

If designing for the web, however, prioritise web conventions over native conventions. To mimic one platform is to alienate another. Very few web apps are run on just one OS, and fragmentation shows no sign of slowing. Since users’ upgrade habits differ, even if you expect one OS to dominate your userbase, older versions will introduce their own inconsistencies.

Recreating native design conventions for the web is a painful endeavour. Interaction design patterns – transitions, timings, and behaviours – are fiendish to reverse engineer and replicate. They’ll add bloat to your code, and will need updating whenever the host OS tweaks its styles.

The urge to design web apps that feel integrated with a specific device is understandable, but can be ruinous. If a native feel is that important, build a native app. Web apps should embrace platform neutrality; the web’s diversity is its primary characteristic.

However, it is useful for a site to know what the OS and browser can do. Through the User-Agent (UA) string, a browser will identify its name and version, the OS name and version, and other information such as the default language used. This information can be somewhat helpful, but UA sniffing is notoriously unreliable, since many browsers assume the identity of more popular cousins.

Even if you can rely on the UA string, there’s no fully reliable way to know precise browser and OS capabilities from version numbers alone. The best efforts to date involve a mix of client- and server-side techniques, such as referring to WURFL to learn basic features, followed by client-side feature detection using JavaScript. Libraries like Modernizr can tell you whether a browser supports features like touch events, local storage, and advanced CSS, and drop a cookie containing this information to speed up feature detection next time.
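Client-side feature detection boils down to asking the browser what it can do rather than who it claims to be. The hand-rolled sketch below illustrates the idea; it is not how Modernizr itself is implemented, and the function name and result keys are assumptions made for this example.

```javascript
// Report which capabilities a browser-like global object exposes.
// Pass `window` in a browser; the result keys are illustrative names.
function detectFeatures(win) {
  const nav = win.navigator || {};
  let storage = false;
  try {
    // Reading localStorage can throw when the user has blocked storage.
    storage = Boolean(win.localStorage);
  } catch (err) {
    storage = false;
  }
  return {
    touch: "ontouchstart" in win || (nav.maxTouchPoints || 0) > 0,
    localStorage: storage,
    geolocation: "geolocation" in nav,
    cssSupports: Boolean(win.CSS && typeof win.CSS.supports === "function"),
  };
}
```

Each check tests for the feature directly, so a browser that misreports its UA string still gets an appropriate experience.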

For all their benefits, technical solutions can never replace deep knowledge of your customers and the devices they’ll visit your site with. Don’t limit this insight to what’s in use today. To truly understand device context, a designer must keep up-to-date with new hardware and software, and spot relevant emerging trends.

Device context: questions to ask

- What devices will this product be used on?

- How about in a year’s time? Three? Five?

- What can those devices do? What can’t they do?

- What sort of interactions do these devices suit?

- Are there unique device capabilities we can use to our advantage?

- How does our site work on devices that don’t have those capabilities?

- Are there device capabilities that might make life more difficult? How can we mitigate their impacts?

Environmental context

The physical environment around an interaction is an obvious context flavour, and has become increasingly relevant.

Outdoor environments are more diverse than indoor environments, largely due to weather. Be kind to cold hands by minimising user input and making controls a little larger, and remember that touchscreen users may have to remove their gloves. Sun glare or rain on a glossy screen can hamper a user’s colour perception, reducing contrast. Review your use of colour, typeface, and font size to maximise contrast and legibility. (This is good practice for accessibility reasons too, per the Web Content Accessibility Guidelines.)
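Contrast can be measured as well as eyeballed. The sketch below follows the contrast ratio definition in WCAG 2, taking colours as [r, g, b] arrays of 0 to 255; the function names are my own. WCAG’s level AA asks for at least 4.5:1 for normal-sized text, and a design intended for bright sunlight may want to aim higher.

```javascript
// Convert one 8-bit sRGB channel (0-255) to its linear-light value.
function linearise(channel) {
  const c = channel / 255;
  return c <= 0.03928 ? c / 12.92 : Math.pow((c + 0.055) / 1.055, 2.4);
}

// Relative luminance of an [r, g, b] colour, per the WCAG 2 definition.
function relativeLuminance([r, g, b]) {
  return 0.2126 * linearise(r) + 0.7152 * linearise(g) + 0.0722 * linearise(b);
}

// Contrast ratio between two colours: 1 (identical) up to 21 (black on white).
function contrastRatio(colourA, colourB) {
  const a = relativeLuminance(colourA);
  const b = relativeLuminance(colourB);
  const lighter = Math.max(a, b);
  const darker = Math.min(a, b);
  return (lighter + 0.05) / (darker + 0.05);
}

console.log(contrastRatio([0, 0, 0], [255, 255, 255]).toFixed(1)); // prints 21.0
```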

Weather conditions can also affect what users are trying to do in the first place. Golfers won’t be booking a tee time on a snowy day, but they may be looking for indoor practice facilities.

Noisy environments can interrupt users’ concentration. Rely on clear visual cues and don’t ask the user to remember important information from one screen to the next. At the other extreme, do your bit to reduce sound and light pollution in quiet or dark environments. Avoid sound in a library app, and use dark colours for an app to be used in a cinema or opera house; subtle touches that demonstrate you have users’ best interests in mind.

An environment may also include sources of information the user needs to complete the task. A family PC may be laden with Post-It notes and passwords. An office pencil-pusher may refer regularly to a printed price list. Consider what information your users might expect to access in their environment, and what might happen if that information were unavailable.

It’s fun to hypothesise about environmental context, and get swept up in bold predictions about light and temperature sensors. But in practice, the user will adapt to most environmental factors herself: shielding the screen from the sun, muting the device in quiet environments, and so on. Respond too strongly to the environment and your system will become fussy. Worse, unless it’s entirely clear why the system is adapting, it will be incomprehensible, seeming to operate entirely at whim. Rather than trying to solve every problem, try instead to make an understated difference through considerate gestures.

Environmental context: questions to ask

- Will the site be used indoors or outdoors?

- Should weather conditions affect my design?

- What environmental information sources are relevant to the interaction?

- Will a user understand why, and how, my system is adapting to the environment?

- How can I make my product feel natural within its environment?

Time context

Time of use is often linked to the user’s environment, location, and activity. Preliminary data also suggests that certain classes of device display different patterns of use. Tablets see high leisure use in the evenings, desktop computers have higher than usual use during office hours, while smartphones appear to be used frequently during periods of downtime (commuting to work, etc) and for occasional personal use during the working day, particularly if they lie outside the reach of corporate tracking software.

Relative hourly traffic to news sites per device class. (Data from ComScore.)

Displaying the current time is trivial – redundant JavaScript clocks have cluttered web pages since the 1990s. Time data becomes more powerful when it is used to enhance an app’s functionality. A news service can display the freshest content, a timetable app can display information about the next train, or a hotel website can advertise today’s last-minute discounted vacancies. Some publications have even experimented with a ‘night mode’, using a user’s location and sunset data to work out when it’s nighttime, and applying a darker stylesheet accordingly. A cute touch, if not a useful one.
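Two of these ideas, the next train and the night mode, can be sketched in a few lines. This assumes you already have the timetable and the day’s sunrise and sunset as Date objects from some other source; the function names and theme labels are hypothetical.

```javascript
// Return the first departure strictly after `now`, or null if none remain.
// `departures` is an array of Date objects in any order.
function nextDeparture(departures, now) {
  const upcoming = departures
    .filter((d) => d.getTime() > now.getTime())
    .sort((a, b) => a.getTime() - b.getTime());
  return upcoming.length > 0 ? upcoming[0] : null;
}

// Choose a stylesheet label from today's sunrise and sunset times.
function themeFor(now, sunrise, sunset) {
  const isDaytime = now >= sunrise && now < sunset;
  return isDaytime ? "light" : "dark";
}
```

Both functions take the current time as an argument rather than reading the clock themselves, which keeps them easy to test.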

It can also be helpful to understand the time a user has available to use your product. Data suggests that mobile users interact frequently with handheld devices, but in short bursts. Heavily interactive apps can accommodate this behaviour by breaking tasks into smaller subtasks, and by presenting a few precise results rather than comprehensive sets of information (this ‘recall v precision’ tradeoff is a classic predicament in library science and information architecture). Some complex interactions like file handling or extensive research typically require a longer period of user effort. If appropriate, add estimated completion times or progress indicators (“Step 3 of 12”) for complex tasks, allowing a user to decide whether now is the right time to get stuck in.
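A progress indicator with a rough time estimate might be built along these lines. The seconds-per-step figure is an assumption you would need to measure for your own product, and the wording of the label is only an example.

```javascript
// Build a label such as "Step 3 of 12 (about 5 minutes left)".
// `secondsPerStep` is an estimate you would measure for your own product.
function progressLabel(currentStep, totalSteps, secondsPerStep) {
  const stepsLeft = totalSteps - currentStep + 1; // includes the current step
  const minutesLeft = Math.max(1, Math.ceil((stepsLeft * secondsPerStep) / 60));
  const unit = minutesLeft === 1 ? "minute" : "minutes";
  return `Step ${currentStep} of ${totalSteps} (about ${minutesLeft} ${unit} left)`;
}

console.log(progressLabel(3, 12, 30)); // prints Step 3 of 12 (about 5 minutes left)
```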

Time context: questions to ask

- Are there particular times of day that our app is best suited for?

- What else is happening then?

- How long will the user be interacting with our site for?

- How often?

- How can our design fit those patterns of use?

Activity context

What do users want to do, anyway? Now that technology supports a wide range of activities, the classical human-computer interaction notion of a single, clear user task is too narrow.

Many people use the web with no specific task in mind, or with a complex set of hazy goals that can’t be reduced to a single task. Many activities – researching and buying a car, for instance – will involve multiple visits on multiple devices. Some shorter activities suit certain styles of interaction, and native apps are often designed to address more specific tasks. To support a current physical activity – cooking, wayfinding, etc – a user is likely to use a handheld device, but if the task is sufficiently important, she will use whatever technology is available. Entire books have been written on the New York City subway, while in Africa 10,000-word business plans have been written on featurephones.

One way to scrutinise user activities is to classify them along the spectrum of ‘lean forward’ and ‘lean back’. Is the interaction inherently active and user-driven (researching data for a presentation, say) or more passive (flipping through a friend’s holiday photos)?

Historically, the web has skewed toward lean-forward tasks: not surprising, given its origins in academic research. However, new lean-back uses are emerging, and feature prominently in advertising for newer device classes like tablets. YouTube and Vimeo, for example, have recently experimented with lean-back modes that require minimal user input; ideal for watching queued playlists. But it’s a mistake to assume all lean-back interactions must be TV-like; something author Charles Stross terms the broadcasting fallacy. The web’s capabilities stretch the lean-back concept beyond old consumption models, and into the realm of ambient information. One such example is the glanceable: a single-purpose, low-density app that streams real-time information into the room.

(A glanceable of London’s bus timetables is perfectly at home on the quiet, unobtrusive Kindle. The web comes into the world. By James Darling & BERG.)

The glanceable is an early realisation of calm computing – digital technology that exists peacefully in the physical world without screaming for attention. In time, spare web browsers or app-capable OSs will become commonplace in every home and business. A user can keep a glanceable app running in an unobtrusive place, and have the information he needs at a single glance.

Lean-back experiences generally involve little user input and use highly linear layouts. They deserve a minimal interface: no clutter, no alternative pieces of content to grab the user’s attention. They must convey their information in a short, tangential interaction, to a user whose main focus may be elsewhere. Use large font sizes and legible typefaces for textual content, but don’t feel you must aim for the maximum contrast of text and background. So long as the text is legible, a more gentle contrast can allow the information to seep into its environment without overwhelming it.

Activity context: questions to ask

- Do users have simple tasks to fulfil, or a more complex network of activities?

- Are these activities or tasks digital, or do they support real-world activities?

- Does the current activity have a physical component? How can we support that?

- Are the interactions likely to be lean-forward, lean-back, or both?

Individual context

The abilities and limitations of the human body are of course highly significant to design. Henry Dreyfuss’s pre-war efforts to improve design through a deeper understanding of anthropometrics (the measurement of the human body) and ergonomics have had a lasting effect on modern technology. The influence of physical factors on input is significant: more on this in future articles.

Mental context – the user’s personality, state of mind, likes, and dislikes – is more personal and difficult to research, but some patterns are emerging. The form of the device affects not only what the device can do, but also the likely emotional relationship with its user. Public attitudes to smartphones highlight this effect. Carried permanently with the user, or at least within arm’s reach, the smartphone inhabits an intimate, personal space. Edward T. Hall’s research into perceptions of personal space (“proxemics”) suggests that this close area is typically reserved for our best friends, family, and lovers.

It’s hardly a surprise then that people develop emotional connections with their phones. They are friends we whisper our secrets to, and tools that afford us superhuman powers: the first truly personal digital devices.

People respond strongly when this personal space is violated; just look at the public’s hatred of intrusive SMS spam and the vitriolic debates about smartphone platforms, manufacturers, and networks. If you’re building systems for use on portable devices, respect people’s choices and the personal nature of this relationship.

Users’ behavioural preferences can help you understand more about their likes and dislikes; these preferences may be explicitly stated in a Settings screen, or extrapolated from previous behaviour such as clickthroughs, saved content, and purchase history. You may be able to learn from past actions to customise the interface, but the approach is more complex than it may seem. Adaptive menus that prioritise previous selections have been a mixed success in desktop software: they can offer usability shortcuts, but if the rationale is unclear users often find them unpredictable.

Any attempt to mould your application to the user’s previous behaviour must be subtle, like erosion or shoes slowly wearing in with time. A 2002 BBC homepage redesign project explored the idea of a ‘digital patina’ that slowly adjusted to a user’s accrued interactions:

(From The Glass Wall – BBC.)

“If the colour of the whole page could change, would it not be possible to allow the user’s interaction to ‘wear a path’ in certain areas of the homepage? It meant that the saturation of the most commonly used part of the page (for each individual) would subtly become more intense over time… providing a richer and more relevant experience.”

Emotional issues such as your users’ mindsets, motivations, fears, and attitudes to technology are understandably difficult to tease out. Your research will prove its value here; listen carefully in interviews for clues about the emotional context a user will bring to the interaction, and look for notable patterns.

Individual context: questions to ask

- Can we use any stated preferences to tailor the system to an individual user?

- Is it appropriate to let users explicitly state preferences for this interaction?

- What sort of emotional connection will users have with our site, and the devices they access it from?

- What mental attitudes do users bring to the interaction?

Location context

Location context is simple to detect automatically, thanks to native hardware APIs and, on the web, the Geolocation API. The API asks a device to calculate its position by whatever means it has available, including GPS, IP address, WiFi and Bluetooth MAC addresses, mobile network cell IDs, and user input. The main output from the API is a pair of longitude/latitude co-ordinates. If you have access to additional geographical data, you can then turn this into more human-friendly and relevant locations, such as city and area names, addresses, or personal statements of location such as ‘home’ or ‘work’.
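On the web, a basic position request might look like the sketch below. The getCurrentPosition call and its options belong to the Geolocation API; the wrapper function, the shape of the result object, and the chosen timeout values are assumptions made for illustration.

```javascript
// Ask a Geolocation-style object for the current position.
// In a browser, pass `navigator.geolocation`.
function locate(geo, onResult) {
  if (!geo) {
    onResult({ ok: false, reason: "unsupported" });
    return;
  }
  geo.getCurrentPosition(
    (position) => {
      const { latitude, longitude, accuracy } = position.coords;
      onResult({ ok: true, latitude, longitude, accuracyMetres: accuracy });
    },
    (error) => {
      onResult({ ok: false, reason: error.message });
    },
    // Illustrative options: accept a cached fix up to a minute old.
    { enableHighAccuracy: false, timeout: 10000, maximumAge: 60000 }
  );
}
```

Turning the co-ordinates into a city name or address needs a separate geocoding service, which is outside the scope of this sketch.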

People spend much of their lives at these habitual locations, but can now bring connected technology wherever they may be. This can create confusion over terminology. My stance is that ‘mobile’ refers to user location, not a device category. Some devices are more portable than others, but a device is only mobile if it’s being used in a non-habitual location.

It’s essential to understand that someone’s choice of device and their current location are not causally related. Not every user who is out and about is distracted or ‘snacking’ on information. Nor is every smartphone user unable to tackle detailed tasks. However, the mobile stereotype does contain grains of truth. People in unfamiliar surroundings generally have more local information needs: a recent estimate claims that 33% of search engine queries from smartphones are related to the user’s location.

Knowing the location context is extremely valuable for certain types of application, such as travel, retail, or tourism. Although portable devices offer the most obvious use cases, location context can be relevant to users of desktop browsers too. All major browsers offer good support for the Geolocation API; don’t restrict the potential benefit to a subset of your users.

You may also have access to declarative location data: information the user has published about her intent, such as a calendar event, or tweet about her travel destination. Handle this personal data with care, and beware the ambiguity of language. To a Brit, the word Vauxhall represents both an area of London and a popular car marque. Beeston is an area in both Nottingham and Leeds. Like much other contextual data, use declarative data to provide subtle improvements where appropriate, but appreciate the likelihood of error.

Location-aware apps present huge commercial and user experience opportunities, but thoughtless use of location data will stir up trouble. For privacy reasons, the Geolocation API spec insists that users must give express permission for a site to access their location; today’s browsers use a confirmation dialog for this purpose. Likewise, operating systems will usually prompt users of native apps upon receiving a geolocation request. Expect users to decline access unless the benefit is immediately clear. If your application will perform better (more relevant results, less hassle for the user) with location access, explain this simultaneously with the request. Never collect location data solely for your own benefit. The gratuitous request will erode user trust, and could even break data protection laws in some countries.
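The sketch below shows one way to pair the explanation with the request and to treat a refusal as a normal outcome. It is written against a small `GeolocationLike` interface that is meant to mirror the shape of `getCurrentPosition` so the code can run outside a browser; that interface, the `LocationOutcome` type, and the function name are assumptions for this example. The error code 1 for a denied permission is the value the Geolocation API defines.

```typescript
// Minimal shapes mirroring the parts of the Geolocation API used here.
interface PositionLike {
  coords: { latitude: number; longitude: number; accuracy: number };
}
interface PositionErrorLike {
  code: number; // 1 = PERMISSION_DENIED, 2 = POSITION_UNAVAILABLE, 3 = TIMEOUT
}
interface GeolocationLike {
  getCurrentPosition(
    onSuccess: (position: PositionLike) => void,
    onError: (error: PositionErrorLike) => void
  ): void;
}

type LocationOutcome =
  | { kind: "located"; latitude: number; longitude: number; accuracyMetres: number }
  | { kind: "declined" }
  | { kind: "unavailable" };

const PERMISSION_DENIED = 1;

// Shows the benefit first, then asks. In a browser, `geo` would be
// navigator.geolocation and `showReason` would update the page.
function requestLocationWithReason(
  geo: GeolocationLike,
  reason: string,
  showReason: (message: string) => void,
  onOutcome: (outcome: LocationOutcome) => void
): void {
  showReason(reason);
  geo.getCurrentPosition(
    (position) =>
      onOutcome({
        kind: "located",
        latitude: position.coords.latitude,
        longitude: position.coords.longitude,
        accuracyMetres: position.coords.accuracy,
      }),
    (error) =>
      onOutcome(error.code === PERMISSION_DENIED ? { kind: "declined" } : { kind: "unavailable" })
  );
}
```

Keeping "declined" separate from "unavailable" lets the app respect a refusal without nagging, while still offering a retry when the device simply could not get a fix.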

As always, flawed assumptions present the greatest pitfalls. Someone who’s visiting the Indianapolis Motor Speedway on the day of the Indy 500 is probably there for the race; but even then, she could be a spectator, a sponsor, an organiser or a member of security staff. All have different needs. Some APIs (including the Geolocation API) return an accuracy value along with the longitude and latitude – look closely at this figure, consider the possible errors it introduces, and provide the appropriate precision.
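To make the point about precision concrete, here is a minimal sketch that picks how specific a location description should be from the reported accuracy (the Geolocation API gives this in metres). The thresholds, the `Granularity` names, and the `PlaceNames` shape are assumptions chosen for illustration; suitable values depend on the product.

```typescript
type Granularity = "street" | "neighbourhood" | "city" | "region";

// Maps the reported accuracy radius to the finest level worth showing.
// Anything that fails every comparison (including NaN) falls back to the coarsest level.
function granularityFor(accuracyMetres: number): Granularity {
  if (accuracyMetres <= 50) return "street";
  if (accuracyMetres <= 1000) return "neighbourhood";
  if (accuracyMetres <= 25000) return "city";
  return "region";
}

// Names for one position at each level, as a reverse-geocoding service might supply them.
interface PlaceNames {
  street: string;
  neighbourhood: string;
  city: string;
  region: string;
}

// Chooses the description that the accuracy can actually support.
function describeLocation(names: PlaceNames, accuracyMetres: number): string {
  return names[granularityFor(accuracyMetres)];
}
```

With a 5 km accuracy radius, for example, this sketch would show only the city name and avoid claiming a street the user may not be on.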

Location context: questions to ask

- Do users have location-specific needs?

- Will access to the user’s location improve the service my app can offer?

- How can I best communicate why a user should grant location access?

- Can I present location information in a more human-friendly format than long/lat?

- How can I be sure my location assumptions are accurate?

Social context

The simplest manifestation of social context is who else is near the user. Each individual user will assess whether the social context is appropriate for whatever they’re doing: this may be an almost subconscious decision.

The only way to understand privacy concerns, and the criteria people use to assess suitability, is to talk with users and test your applications with them.

Although some activities demand privacy, others are inherently social. For these, presence of trusted friends is a desirable context. While we typically think of the web as a single-player game, new inputs and contexts suggest new applications that suit two or more users on the same device. Whether single-user or not, many interactions are heavily influenced by social factors. Social systems need simple, effective ways for people to communicate and share, whether in real-time or asynchronously. Save data and state in the background, and provide persistent URLs to allow users to involve others easily.

Products like chat or photo-sharing have obvious social aspects, but if you research carefully you’ll find that most activities involve some social context. Someone booking flights, for instance, may have to compare results and send them to a spouse or boss for approval.
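Persistent URLs are one of the cheapest ways to support that kind of hand-off. The sketch below stores a search in the query string so that the link itself reproduces what the sender saw. The `FlightSearch` fields, the function names, and the example.com address are assumptions for illustration only.

```typescript
interface FlightSearch {
  from: string;
  to: string;
  date: string; // ISO date such as "2013-03-01"
  passengers: number;
}

// Encodes the search into a URL that can be pasted into an email or chat.
function toShareableUrl(baseUrl: string, search: FlightSearch): string {
  const pairs: Array<[string, string]> = [
    ["from", search.from],
    ["to", search.to],
    ["date", search.date],
    ["passengers", String(search.passengers)],
  ];
  const query = pairs
    .map(([key, value]) => encodeURIComponent(key) + "=" + encodeURIComponent(value))
    .join("&");
  return baseUrl + "?" + query;
}

// Rebuilds the search from a shared URL, or returns null if anything is missing or malformed.
function fromShareableUrl(url: string): FlightSearch | null {
  const queryStart = url.indexOf("?");
  if (queryStart === -1) return null;
  const values: { [key: string]: string | undefined } = {};
  try {
    for (const part of url.slice(queryStart + 1).split("&")) {
      const eq = part.indexOf("=");
      if (eq > 0) {
        values[decodeURIComponent(part.slice(0, eq))] = decodeURIComponent(part.slice(eq + 1));
      }
    }
  } catch {
    return null; // decodeURIComponent throws on malformed escapes
  }
  const from = values["from"];
  const to = values["to"];
  const date = values["date"];
  const passengers = Number(values["passengers"]);
  if (!from || !to || !date) return null;
  if (!(passengers >= 1) || Math.floor(passengers) !== passengers) return null;
  return { from, to, date, passengers };
}
```

Because the state lives in the address and not in one person's session, the recipient sees the same results without logging in as the sender.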

Social networks can be excellent tools for encouraging adoption and improving user experiences, but again you must balance this power with responsibility. If your app asks users to authorise access to a social network (eg for login), it must receive the user’s precious trust. If the app doesn’t behave appropriately, it will face a vicious backlash. Ask for the minimum level of access you need, and never post anything to a user’s social network without their explicit permission.

Finally, consider the likelihood of device sharing. Desktop PCs and tablets are often shared between family members, and in some nations it is common to share phones among families or even entire communities. Because personal devices contain private data, users frequently add security at the OS level, such as passwords or PIN locks. Counterintuitively, this can make users more lackadaisical about security within a web browser. Since the device is ostensibly secure at the OS level, users may see no sense in logging out of systems after use.

However, users of shared devices may be reluctant to stay permanently logged into your site, or they may use a private browsing mode if the browser offers one. Think carefully about whether you should reveal a history of a user’s interactions, such as previous searches or pages viewed, or whether you should keep it hidden. If you expect a lot of visitors from shared devices, you may wish to offer quick user switching to allow people to leap between multiple profiles.

Social context: questions to ask

- Will the app be used in solo, private contexts, or in public?

- Are there ways to reduce any risk of embarrassment or public discomfort for the user?

- Who else is involved in this activity other than the end user?

- Is there benefit in asking the user to authorise my app with their social networks?

- Does my app protect the user’s sensitive information with sufficient care?

Context design principles

You may have noticed that the first letters of the flavours of context – device, environment, time, activity, individual, location, social – spell a memorable acronym: DETAILS. Use this shorthand to help guide your research and to ask the right questions of your team. Your answers will of course be unique to your project, but general principles can still be instructive.

Context is multi-faceted.

Different flavours of context will dominate at certain points. As Paul Dourish predicts, context is fluid and constantly negotiated. Not all the flavours will be important factors – as so often in design, there are no clear boundaries that separate the relevant from the irrelevant. Use your research, intuition, and testing to choose which contextual factors should affect your design. As a general principle, unless a context flavour notably affects users’ behaviour, it’s unlikely to be a high priority. However, the research and consideration required to reach this conclusion is still valuable, and if circumstances change, that flavour of context may suddenly become pivotal.

Don’t penalise people for their contexts.

Wherever possible, the user should have access to the same functionality and content in all relevant contexts. Never punish someone for using the device of their choice in the manner they want. One sadly persistent example of this error is ‘mdot’ websites that remove useful functionality in the mistaken belief no one will use it on a portable device – a mistake designer Josh Clark likens to excising chapters from the paperback version of a book. To force a user to wait unnecessarily until he returns to a desktop computer is arrogant and lazy. It demonstrates only that you believe you know best, and that you haven’t spent enough time understanding the user or his circumstances.

If, for instance, you choose to create a separate mdot site to accompany your large-screen site, strive for functional parity. Understand and define your core product, then look for ways that different contexts can enhance the user experience. Hide content and functionality only where it is absolutely necessary for a certain context (eg insurmountable security reasons) or if it genuinely improves the user experience. Use context as a way to reduce complexity or add power, not as an excuse to avoid tricky design challenges.

It is, however, perfectly acceptable to present the same content and functionality in different ways to suit particular contexts. The notion of a unified user experience across multiple devices and contexts is a myth: there’s simply too much diversity. It’s far better instead to aim for coherence – in which all forms of your product hang together to create an easily understood whole.

Assume gently.

Context is deeply subtle and human. Respect that. It’s exciting to imagine ways that technology can use context to surprise and delight people, but it’s easy to get carried away. Even your most sophisticated algorithms will frequently misinterpret the user’s context. One man’s interminable traffic jam is another man’s peace and quiet.

Do look for ways to improve the humanity of your products through context, but be gentle. If in doubt, aim for stability and similarity between different instances of your product, rather than introducing large variations based on assumptions. Context-aware systems that are too variable are confusing, and make design, implementation, and maintenance far more complex. A light touch – say, a few layout and presentational variations for different screens, and better data upload processes for devices with particular features – will be substantially easier to manage in the future.

Allow adaptability.

If you do make fundamental changes to your product to suit different contexts, inform the user and allow them to override the decision. For example, if you must remove functionality from a small-screen mdot site, allow the user to return to the large-screen site somehow (a link in the footer is the current de facto standard). Although the large-screen version won’t be optimised for that device or context, the user can still get things done.
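A minimal sketch of that override, assuming the site has some way to detect a default variant and to remember the user’s explicit choice (a cookie, say). The type names, function name, and link wording are hypothetical.

```typescript
type SiteVariant = "small-screen" | "large-screen";

interface VariantDecision {
  variant: SiteVariant;
  chosenBy: "user" | "detection";
  // The link to offer (in the footer, for instance) so the user can switch.
  switchLink: { label: string; target: SiteVariant };
}

// An explicit user preference always wins over what the system detected.
function decideVariant(detected: SiteVariant, userPreference: SiteVariant | null): VariantDecision {
  const variant = userPreference !== null ? userPreference : detected;
  const other: SiteVariant = variant === "small-screen" ? "large-screen" : "small-screen";
  return {
    variant,
    chosenBy: userPreference !== null ? "user" : "detection",
    switchLink: { label: "Switch to the " + other + " site", target: other },
  };
}
```

The decision always carries a way back, so neither the detected default nor an earlier override becomes a dead end.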

Some operating systems help the user understand what data has been gathered, using icons to indicate use of the device’s GPS unit, and so on. However, if your site uses contextual data to present different information or views, look also for ways to describe these choices in the interface.

Foursquare does a sterling job of explaining context. This screen alone explains the social context (friends who liked a venue), location context (map & distance), and time context (“This place is busier than usual”) that has underpinned these recommendations.

Revisit your decisions.

Context will remain a slippery subject because context itself is changing. To improve the shelf-life and sustainability of the digital products we design, we must think about flexibility and emergent use. How will people be able to create their own uses for what we build? Some of today’s biggest social networks have evolved through emerging user convention. Although the seven flavours of context are timeless, we can barely imagine the permutations of context that will become commonplace in the next ten years. Stop periodically to reassess the landscape, and aim to build flexible systems that can be traversed in new ways. All that’s required is the willingness to experiment and see what new forms of context mean for the future of our technologies.

For an extensive resource list, see delicious.com/cennydd/context.

The incoherence of Black Ops 2

I finished Black Ops 2 yesterday. Is it too much to ask that games make some sort of sense?

Treyarch clearly wanted to differentiate itself from previous, formulaic Calls of Duty, but has instead created an awful mess. The contents of my brain after the credits, unencumbered by such things as post-hoc verification:

The year is 2025, but sometimes 1970 or 1989. There’s Noriega, baseball-capped, slouching like a teenager against a wall. He’s oily and diminutive in the way these games usually depict Hispanic people.

The main villain wears a light sports jacket and a facial scar: Abu Hamza with a subscription to Monocle. He planned to be captured, of course. He plucks out his eyeball and smashes it to reveal a SIM card containing a virus that cripples the world’s militaries.

Touchscreens, holograms, and hacking: all drain any sort of momentum (“Wait here while the techs override the door lock!”). A “data glove” whose function is unclear. After rescuing a pilot, you commandeer her plane for a tediously death-defying dogfight over downtown Futureville. When you fly off the unmarked map the scene resets, and you sigh.

A regrettable flirtation with top-down strategy hampered by dopey AI. A gunfight in a nightclub (dancing is still the uncanny valley of CGI) that is still pretty cool because it was ripped off Vice City and has – yes really – a Skrillex soundtrack.

A wheelchair-using veteran clearly cost money. The camera lingers on his expensive, expressive face. He does anguish, then rage. After the credits, he rocks out with Avenged Sevenfold in an embarrassing tie-in that proves that adults weren’t expected to finish this game.

Big Dog evolutions and Parrot drones that kill you from fucking nowhere. Bullets fly fast and penetrate walls but enemies still absorb a few gutshots before falling. A scene featuring horseback riding and Stinger missiles, like a mundane Shadow of the Colossus. The horses shoot lasers from their eyes and are made entirely of QR codes.

And amid this drunken Millennial incoherence, a game engine that, while functionally competent, lacks any kind of embodiment. Rather than being immersed in this ridiculous action, you sit atop it. Even the futuristic guns are limp. A grenade explodes behind you with a flat pop. The grenade indicator is so faint that you find yourself tripping over the damn things at random. You get shot, but it’s not really clear by whom, from where, and why you should care.

For all their gung-ho predictability, the Modern Warfare games feel great. They give great feedback. They throw you into the noise and the light. Their undeniable set-piece excess is still bounded within sensible limits of intelligibility. Black Ops 2, by contrast, is wild, flabby and virtually unfathomable.

On failure

I’m no longer writing Designing The Future Web. Even after 18 months and 25,000 words, the well of knowledge is filling faster than I can draw from it, and it’s become clear that I can no longer make the sacrifices a book demands.

It would be emotionally easier to let the spark fizzle out, dodging questions until no one thinks to ask them any more. But that seems dishonest. It’s right that I should admit failure with the same fanfare as I undertook the project.

Although my research and ideas have resisted being captured in words, they’ve made me a better designer. They’ll still allow me to tell my story and influence the profession through my work.

However, I do plan to publish some of my ideas. I think I have something to contribute, and after so long hoarding opinions for the big reveal, it will also be a relief. At the very least, expect blogging: joyful tracts with occasional idea-flecks.

Failure is embarrassing, of course – but it can also clear the path to other kinds of success. My editor and publisher have both been fantastic, and I thank them for the support they offered me. Now, I’m looking forward to looking forward.

Line management by numbers

Here’s something I was taught a few years ago about how to adjust your management approach. I’m paraphrasing slightly.

Score your employee out of ten for:

- Current motivation

- Experience in the role

- Skills

Then look at the lowest number of the three.

- 0-3: adopt a tight, management-heavy approach until performance picks up.

- 4-7: take a supervisory, mentoring approach.

- 8-10: occasional coaching is all you’ll need. These are the good times.

It’s a useful shorthand that reminds you to keep a keen eye on someone who’s green, sub-par, or struggling, and allows you to step away as they find their wings.
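For anyone who prefers to see the rule written down, here it is as a small function. The type and field names are my own, and the handling of fractional scores (anything below 4 counts as the lowest band, anything below 8 as the middle one) is an assumption, since the original bands are given only for whole numbers.

```typescript
type Approach = "tight management" | "supervisory mentoring" | "occasional coaching";

// Each score is out of ten.
interface EmployeeScores {
  motivation: number;
  experience: number;
  skills: number;
}

// The lowest of the three scores decides the approach.
function managementApproach(scores: EmployeeScores): Approach {
  for (const value of [scores.motivation, scores.experience, scores.skills]) {
    if (!(value >= 0 && value <= 10)) {
      throw new RangeError("Scores must be between 0 and 10");
    }
  }
  const lowest = Math.min(scores.motivation, scores.experience, scores.skills);
  if (lowest < 4) return "tight management";
  if (lowest < 8) return "supervisory mentoring";
  return "occasional coaching";
}
```

Someone highly skilled and experienced but scoring 2 for motivation still lands in the first band, which is the whole point of taking the minimum.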

A changing tide

(Some half-thoughts based on hunches and radar blips. Go easy.)

I’m starting to feel that we’ve reached an inflection point in digital design.

Specialisation and consultancy were the dominant trends of the last 5–10 years. Experts thrived in high-profile agencies or lived comfortably as independent consultants, while in-house design teams were largely seen as downtrodden, pulled in too many directions, and unable to establish themselves as genuine authorities.

But now the polarity is reversing, and I sense a drift toward centralisation. It is the hybrid designer – not the specialist – who is most in demand, and every capable visual designer has picked up interaction design fundamentals. The specialists’ differentiation and competitive advantage is shrinking. The result is a swing toward direct intervention rather than consultancy, and companies that value breadth and flexibility over individual expertise.

Judge for yourselves whether you think the evidence is strong enough. Peter Merholz – formerly of Adaptive Path, the original UX supergroup now boasting a much-changed lineup – recently listed some noted interaction designers who have made the transition, and we’re all aware of Facebook’s aggressive pursuit of design talent in recent months. Perhaps my joining Twitter is another illustration of my hypothesis. I also speak with many senior interaction design agency staff who dream of the ideal startup role, or of transitioning into product management (which I suspect is far more challenging than most anticipate).

A great agency is still a strong asset to the industry and its clients, just as a bad agency is still harmful – and there are undoubtedly counter-examples to my evidence. However, one thing is clear: the design industry’s focus is no longer on agencies. It is on products.

Perhaps this is a natural evolution. Now that clients understand the value of design, it’s entirely logical for them to build their own capabilities. And maybe we’re also experiencing the limitations of our previous approaches. Responsive design and Agile have forced us to re-evaluate our methods, and we’re finding there are simply no tactical short-cuts for cross-channel and service design: the entire company itself must be designed, which demands internal influence. Money’s certainly a factor too; stock options and acquisition deals can be hard to resist.

But I also wonder if there’s a deeper motivation: a collective mid-career crisis, if you will. A household brand in our portfolio no longer appeals. No one wants to make another campaign site or Groupon clone. Instead, I see a community questioning whether it’s had the impact it dreamed of in its idealist youth. The ‘make the web better one site at a time’ mindset is really just treading water at this point.

One reason I found last year’s Brooklyn Beta so compelling was that I met many attendees who were struggling with this angst, as I was. The sense of shared cross-examination was palpable, and the unspoken conclusion was clear. If we truly believe in the power of design, it’s our duty to apply it where it can have the biggest impact.

I’ve been thinking a lot about scale recently. How can we amplify the effects of what we do? I see three methods:

- Example & education – sharing ideas, successes, and opinions through case study, mentorship, speaking, writing.

- Leadership – assuming positions of authority in organisations (executive level, internal champions), or in public office (politics, pressure groups, professional associations).

- Reach – finding ways to increase the number of users/citizens our designs affect.

I see many recent changes in the design community mapping to the latter two goals. We’ve never had any problems with Example and Education, and long may that continue. But I sense a new attitude of buckling down to change entire organisations, to increase public and governmental awareness of the importance of our work, and to seek out opportunities to affect the lives of millions. This is music to my ears.

A lot’s been written about the alleged decline of client services, and plenty of people are now rushing to its defence. As always, “it depends” is the only reliable answer; context is the key factor in deciding whether to work for, or hire, external consultants. But I do wonder how the agency world will respond to this shifting community focus. How will they manage to stay an attractive option for designers and organisations who are increasingly internally-focused?

There is another possible interpretation: namely, that I’m projecting my own assumptions onto behaviour that could be interpreted any number of ways. But I spy a pattern – however faint – that’s worth further examination. Am I onto something, or just seeing ghosts?

Joining Twitter

Self-employment has been a great experience. I’ve worked with excellent clients, learned to swim in the deep end of business, and enjoyed the flexibility to balance my time as I wish. I could be comfortable doing it for many more years. But who wants to be just comfortable?

Later this month I’ll be joining Twitter as a senior designer, working on the evolution of Twitter’s apps with the London team. It’s Twitter’s first full-time design position in Europe, and as such has huge potential – and undoubtedly some intriguing challenges.

To me, Twitter is more than just a technology company. It’s a company that is shaping global culture; but one that also appreciates the ethical implications of its work. In short, it’s an irresistible opportunity.

For the next few months I’ll join the curmudgeonly ranks of commuters, until I move up to London later in the year. I’m planning to stay active in the British and European design communities, and will continue work on Designing the Wider Web. Oh, and I’ll finally get to visit San Francisco, and spend some time with one of the best design teams around.

But of course there are plenty of unknown unknowns, and my excitement is mixed with gentle terror. I’ve no doubt it’s going to be fascinating, difficult, rewarding work. Wish me luck.

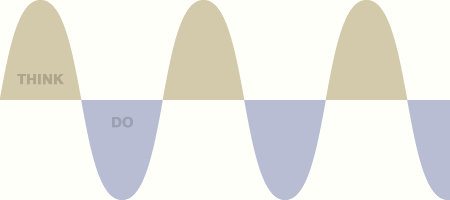

Why I don’t wireframe much

I was going to write a long post, but I think a rough diagram suffices.

My life as a unicorn

Last year, the UX uniform I’d worn for a decade started to feel like a straitjacket. I wasn’t learning as rapidly as I once did, and my work had plateaued. I felt I was coasting, and falling victim to dangerous nouns like boredom and arrogance.

I think the UX industry has found a local maximum; undeniably comfortable, but somewhat short of what it could achieve. I voiced these concerns at the 2011 IA Summit, suggesting that corporate recognition wasn’t the endgame, and that the community should refocus and magnify its efforts on the world’s most pressing problems. One year on, there’s very little I’d change about the talk. While the UX industry has been very successful, and I adore the friends and peers who make it up, I worry it too has begun to coast.

UX no longer felt quite like home, and I yearned for open waters. So I dived in. Moving away from the labels and language of UX, I adopted the title Digital product designer. Great experiences are still my objective, but I wanted to explore beyond the boundaries of what the UX role had become; to use my interest in writing, typography, brand, and graphic design to enhance my work. Not a wish to generalise so much as a wish to specialise in more areas. In particular, I’d come to view the gap between UX and visual design as arbitrary: “You take the wheel, I’ll do the pedals”.

Over the last year I’ve spent long hours studying graphic design, learning more about its techniques and tools, and creating a new role for myself that combined my interaction design expertise with my new visual design skills. In popular digital parlance, such a designer has come to be known as a ‘unicorn’: a rare, flighty being never encountered in the wild. It’s a cute label, and a damaging one. It reinforces silos, and gives designers an excuse to abdicate responsibility for issues that nevertheless have a hefty impact on user experience.

There are of course different flavours of UX person. From the design-heavy position I occupied, the leap to digital product design has been feasible. The mindset is virtually identical. A senior UX designer with practical knowledge of the design process, excellent client skills, and an understanding of ideation and iteration already has many of the key skills required in visual design. Someone whose strengths lie more in research or polar-bear IA may find the gap a bit more daunting.

I’ve found I now have a deeper involvement in a product’s lifecycle, from inception through concept to the end product. I feel far closer to the product than I did previously. This has meant I’ve been taken more seriously on issues of product strategy, seen less as a user-centred advocate and more as someone who can bring a client’s vision to life, and shape a complete product over time. I also can’t deny the ego-massaging pleasure of presenting work that elicits an immediate ‘wow’ – something a wireframe could never do.

I was already well-read about the theory of graphic design, but improving my technical skills has taken no small effort. I’m still working hard on my sketching and visual facilitation skills, but thankfully the software knowledge has come easier. Forcing myself to finally master design software has been a blessed relief. For all the flak Adobe get, Fireworks and Photoshop are so much better suited to UI work than Omnigraffle – although of course they too have serious limitations in an era of fluid design. I’ve also started to experiment with print design, and have enjoyed poking my fingers into more of the Creative Suite.

I’m creating fewer of the classic UX deliverables, and have tried to forge a wider variety of tools for each situation. If the situation demands visual detail, I’m able to pull together a detailed comp. If we need speed, a sketch suffices. For interactivity, I’ve been knocking together scrappy image map walkthroughs, or more solid HTML prototypes. I’ve also spent a lot more time worrying about words and labels, and have again been reminded of their importance in design. I firmly believe that any designer who overlooks the importance of copy, thinking it someone else’s job, is missing a powerful way to improve his work.

But I still have plenty of angles to figure out. My design process has become more pliable, which confers both benefits and disadvantages. I still practise UCD frequently, but I’ve also become more familiar with ‘genius design’. There’s been lots of expert opinion and less recourse to the user, although in part this is also a property of the startup market I’ve been working in. My clients appear to have enjoyed this flexibility of process. Certainly some companies still believe UCD to be unnecessarily bulky; and it can be hard to disagree (hence the rise of Lean UX). I genuinely don’t know yet whether taking a more fluid approach to process has led to better outputs – I’m still evaluating – but it has certainly broadened my viewpoint.

In moving away from UX, I’ve taken a hit to my reputation. Previously I was fortunate enough to be seen as someone near the top of the UX field; now, I don’t fit so well into established mental models. Some members of the UX community have noticeably edged away from my views, and I don’t get added to the same lists or invited to speak at the same conferences now. I expected this, and have no problem with it, but I do feel sometimes that I’ve lost the safety in numbers that an established community offers. It’s also been difficult at times to explain my angle and how my service differs from others’. However, this has upsides. I’m no longer hired as a UX-shaped peg to fit a UX-shaped hole; instead, my clients hire me for my individual skills.

I’ve also had to fight against expectations of speed, from both clients and myself. Time moves more slowly at the quantum levels of pixels, and my broader remit has meant that my work takes longer. It’s made estimation and project planning trickier, and has also raised issues around pricing. The obvious response to my shift would have been to raise my rates. However, I no longer have a clear market rate to price against, and I’ve been very conscious of not asking for too much while I was still making the transition. Right now I’m undercharging. That will change in due course.

So was my move the right one? For me, yes. I’ve found a far deeper appreciation for the craft of design, and I’ve rediscovered the excitement that had started to ebb away. In this period of massive change in the digital world I feel more flexible and valuable, and I’m positive that I’m a better designer as a result.

However, the digital product design role isn’t for everyone, and I shudder at the thought of this being seen as a manifesto, roadmap, or one of those odious ‘[Discipline X] Is Dead’ posts. Specialisation is still highly important, and many projects will be better off with separate UX and visual roles, rather than chasing unicorns. But personally, I’m glad I made the leap.

Ephemeral ennui

I still love what I do. But on days like today, when I’m woozy and tired, it gets too much.

The grinding of definitions cogs. The all-new responsive adaptive interaction experience. Do Ninja Brogrammers have a collective noun? Change the world! The promise, the plateau, the privacy violations. Five Things Designers Can Learn From X Factor. Make it like Pinterest. Update the firmware, then wait for the bug fixes. Do Not Reply To This Email. Klout scores and expanding Instapaper waistlines.

It’s an exhausting treadmill. No wonder ours is largely the domain of the young.

I still love what I do. But every now and then I have to remind myself that these ephemera – the words, the whirlwind, the white heat – don’t matter.

What matters is making beautiful things. Always.

Low-budget responsive design

Today I’m at Responsive Summit, a last-minute gathering of some folks who are interested in responsive web design and its effect on our industry. It’s obviously a topic close to my heart. Martin Beeby has been live-tweeting some snippets of what’s been said, including this one:

“If you have a client that won’t pay for responsive design, get a client that will.”

This quote has, unsurprisingly, not gone down well on Twitter. Responsive Summit has already been accused of being an elitist gathering. The website was tongue-in-cheek but the joke perhaps fell a bit flat, and the attendees are generally a high-profile bunch. So quotes like this, facile and arrogant, make for easy targets.

The quote originated from something I said. I can’t remember my exact words – I’m rushing this post out over lunch – but let me give some context, so you can judge for yourself whether it was as dumb as it sounds.

One of my fellow attendees was explaining to the group that her client budgets generally didn’t allow her to practice RWD, and she was having a tough time explaining the business benefits.

My response was that our is an industry with overwhelming competition at the low end. Everyone’s neighbour’s kid can bash out a site for £100. Companies like 1&1 will sell you a templated site for not much more. However, the companies that are typically practising RWD on large client sites operate at the top end of the market. They’ve carved out a niche as craftspeople creating bespoke solutions. The time and budgets they’re afforded allow deeper work, including some of the detailed intricacies of thorough RWD.

So my point was that, providing you have the skill, it can be easier to find market space and freedom to practise newer techniques by heading up the value chain, not down it. If you desperately want to practise responsive web design and your budgets don’t allow it, you have two options:

1) Do it anyway. This is an attitude close to my heart, and formed the bulk of Undercover User Experience Design’s ethos. There’s been lots of talk today of how RWD has already become a natural part of many people’s workflows.

2) Negotiate higher budgets. This may require working with different clients.

Some people have assumed from the quote that the Responsive Summit has decreed that RWD is the only way a site should be built, and that we should ditch any client who doesn’t drink the Kool-Aid. This is definitely not the case. We’ve already spent a fair bit of time agreeing that for some clients, RWD is a waste of time and money. But if you’re insistent you want to do RWD, you’ll have to either take the resultant budgetary hit yourself or find someone who will fund it.

So that’s the story behind that quote. The day so far has been smart, thoughtful, and useful – it would be a real shame for someone to judge it because of one out-of-context soundbite. Hopefully we’ll be able to share some more of the discussions so that people can build on them, and argue against them, in their own ways.

Out of sight?

I’m sure “Great design is invisible” looks fantastic on a crisp Helvetica poster but, like all slogans, it whitewashes complexity.

The phrase is a favourite of the UX industry, which generally advocates the suppression of a designer’s personal style in favour of universal functionality. The idea of the designer as a benevolent force, steering the user toward her own goal, is appealing – but it’s a mindset that belongs more to the usability era than the voguish age of experience.

Sure, sometimes great design is invisible. But sometimes great design is violent, original, surprising. To deny design’s ability to ask difficult questions, to shock, to flatter, to belittle, is to squander its potential. We mustn’t be afraid to let design off the lead once in a while. In a marketplace of bewildering clutter, products that take a stand – that have a damned opinion – become the most visible.

On travel

I was twenty-seven before I boarded an airplane. When I was a boy, our family holidays in Wales and Cornwall meant that my GCSE French could only be unleashed on the occasional orchestra tour: memories of sweltering coach trips on which someone always forgot their viola.

But this year – and I suppose this is my 2011 retrospective – I’ve visited five continents and spent a quarter of the year overseas. I’ve visited places I always dreamed of and perfected my security choreography: belt, laptop, liquids in under ten seconds.

The disorientation of travel is humbling. I have to learn the customs, the new machines, and the subway maps. I queue in the wrong places, and walk down the wrong roads. I learn to say sorry in a dozen languages. It’s a valuable lesson that mental models are created the hard way.

It’s also fascinating to see how other cultures interact with each other and with technology. Johannesburg’s barbed wire and Tokyo’s vending machines left a particular impression. Travel reminds me that not everyone has a MacBook and fast WiFi. This year I saw some amazing applications of mobile technology: people making do with the tools they have, routing around infrastructure problems rather than blaming them.

Travel also gives me space to think. For all their bustle, airports and hotels are also places of disconnection. However much I try, I can’t work or sleep on planes, so I take the opportunity to read, or squint at forgettable films. And although a hotel bar and a late night can be fun, I’m often at my most productive after I’ve exhausted the local TV channels and set about something that’s been on my list for weeks.

But perhaps the happiest aspect of travel is that it helps us appreciate what we have. The delicious Heathrow relief of finally being able to express myself with a full vocabulary. The coolness of my pillow on my jetlagged, unshaved face. The more I travel, the more I love this petty, depressed country.

I’d hate to become weary of travel, or so privileged that I see it as a burden. Nor do I want to become one of those terrible bores who travels a lot and wants you to know it. So next year I want to travel less but better. I want to learn the character of the places I visit, not just collect the sights and the hurried snapshots.

What bugs me about content out

Recently there’s been much talk of “content out”, the idea that web design should be inspired by the qualities of the text and images of a site. It’s a healthy idea, but like any slogan, it is open to misinterpretation.

The web design industry has only recently afforded content its rightful status. We were wrong to relegate content to the role of a commodity – something we could pour into beautifully-crafted templates. In our rush to rectify this imbalance, we mustn’t overcorrect and deprecate the role of truly creative design.

From an algorithmic perspective, the idea that style and substance are separate is appealing. It allows us to code markup and stylesheets independently, and fits the logical mindset shared by so many techies. But it’s a falsehood. Style and substance are irretrievably linked. Like space and time, they are neither separable nor the same thing – there exists no hierarchy between them, no primacy. One informs the other. The other informs the one.

It’s impossible to perceive content and presentation separately. The two combine to create something more valuable: meaning.

The same content, with very different meanings.

Some of the best-known examples of the content out design principle are blogs from today’s leading digital lights. These sites feature expert typography, harmony and balance. They are undoubtedly beautiful. They also look terribly similar. Book design is the dominant aesthetic, meaning that the content does indeed shine. However, individuality surfaces only in esoteric flourishes. The people who have made these sites are diverse and bold, but these qualities often struggle to surface.

It’s a mistake to let content drive design, just as it was to let design drive content. We mustn’t let the pendulum swing too far. If we are to go beyond mere information and style to create meaning, the two must be partners, feeding from and influencing each other.

Until we see more diversity in the sites that espouse a content out approach, I worry the movement could be too simply characterised as one of minimalism – or worse, faddishness and elitism.

The idea that content can act as the interface is noble. But sometimes you need interface. The interactivity and responsiveness of the digital medium mean it excels at interface. Text can often suffice, but it possesses limited affordances. It conveys information and gives instructions well, but it’s poor at conferring mental models, creating subconscious emotions, establishing genre, and suggesting interaction capabilities: things crucial for brand-driven sites or functional applications.

Overly-literal interpretation of content out could create a web of homogeneity. A web that conveys little that a book could not, save for hyperlinks and videos. A web that fails to take full advantage of the digital medium. For all our talk of breaking free of the print design mentality, content out risks reducing the capabilities of the digital medium, in favour of fetishising the craft of print design. That would truly undermine the intent of the approach.

Enter title here

Today I changed my signatures, my profiles, and my label to “digital product designer”. It was a move I’d planned for a while, but during what became a day of contemplation for the whole industry, I decided the time was right.

I no longer see sufficient distance between what’s labelled “visual design” and what’s labelled “UX design” to limit my specialism. The rhetoric of designing experiences no longer works for me. For now, this new label encapsulates my desire to work on things that people find valuable (as opposed to things that advertise value elsewhere), whatever the channel.

But no big manifesto; it’s a personal choice. They’re just words. Let’s see where they take me.

“Why aren’t we converting?”

A friend from a successful e-commerce site got in touch recently. He’s been steadily redesigning the site, with the help of an external design team. I know the company he’s working with. They’re good. But he hadn’t yet seen the bottom-line rewards he’d hoped for, so he asked for my thoughts.

Here’s my response, edited for confidentiality. Perhaps it’ll be useful to others, and I’d also love to hear any suggestions you have.

From: [xxx@yyy.com] to Cennydd Bowles

The site looks a million times better, but unfortunately our conversion rates have actually dropped. There is certainly noise in the data and an increasingly competitive environment but […] do you have any idea why our conversion rate would be worse?

From: Cennydd Bowles to [xxx@yyy.com]

The short answer is “I don’t know for sure”. The long answer is, well, a lot longer and needs me to talk a bit about the nature and philosophy of design. Please bear with me.

Design is inherently less predictable than most other product fields, since it closely involves emotion, comprehension, taste and all those complex, deeply human attributes. That means that design is a gamble. A good designer will improve your odds, but there’s always a chance that their hypotheses (which, after all, are the most any designer can provide) will prove to be false. A solution that works in one context may fail in another. Because the process isn’t replicable, there can never be scientific ‘truth’ in design; experiments, observation, and iteration are the only way forward.

Much to the design community’s chagrin, sometimes “good design” doesn’t provide the commercial benefits we all expect. Sometimes “bad design” performs better. If I knew why, I’d be a millionaire by now :) I’ve been bitten by this myself – design changes that were “better” by all recognisable theory and good design practice performed worse than the original design. It’s frustrating for all concerned, and embarrassing for the designer.

Figuring out the cause can be difficult too. Introspection of design doesn’t tend to work well – barring major usability problems, it can be tricky to isolate specific points of a design that cause certain actions. The design as a whole has a certain irreducible complexity. So sometimes these surprises just happen, and it’s hard to diagnose the cause. Does that mean design is a poor investment? No. But I would say that it can be riskier than, say, marketing or SEO, which are more linear: generally, put more in the funnel and more trickles out of it.

However, I do suggest seeing user-centred design as something wider than just a means of optimising a conversion rate. While there may not be a noticeable uplift in any specific metric, the raw material of design is frequently intangible: trust, loyalty, engagement, etc. These things are much harder to measure, but they still make themselves felt indirectly in other metrics: support costs, referral rates, customer retention, and so on. Separating the effect of design from these long-term figures is, of course, pretty much impossible, but the long-term aggregated data makes it clear that the effect is genuine (see Apple, etc). Strong design also gives you a better platform to innovate from, and all that good biz school stuff.

But all this philosophising doesn’t answer your question, and I appreciate that the pressures of the bottom line mean you’d hope for a more realisable output for your investment. So let me take a stab at some more direct suggestions:

Natural dip

There’s always a performance dip after releasing a new design, no matter how good or bad it is. This is probably because existing customers’ mental models of how things work have been broken, and it always takes a little time to reestablish those patterns. What can be surprising is the length of this dip – I know of a company that allows six months to pass before they evaluate the success of a redesign, so the smoke has truly cleared. This particular organisation has a very high number of users, so the effect is naturally prolonged, but do make sure you’re confident there isn’t still a temporary effect lingering.

Details

The things that could make the difference in a design might be the little details. I don’t know exactly what your designers gave you, but check to see whether you’ve overlooked small points that might reduce friction. The easiest way to do this would be to ask your designers to run a quick review on what you’ve put live, to make sure it’s working the way they expected it to.

Usability testing